At Programiz, we're all about removing barriers that beginner programmers face when learning to code.

One of those barriers is the lack of a computer to program on. I was lucky enough to have access to a computer at an early age and grow my interest in them, but most people don't have that access.

In my own country Nepal, many people in the poorest parts of the country still don't have access to computers. Forget about personal computers, most students don't even have access to computer labs.

That's when I asked myself, what if ubiquitous smartphones that have penetrated even the poorest parts of the world could become computer labs? What if anyone could just enter a URL on a browser and start writing if statements and for loops?

I found some online compilers that are free to use and serve the purpose. However, their user experience was a problem. They are all bloated pieces of software trying to become full-fledged IDEs in the browser. There are just too many buttons: Run, Debug, Save, Execute, Share. Which one should I press?

The Programiz team decided to create our own version of a mobile-friendly online compiler experience. This post is about the engineering that went into the mammoth task of putting a Python shell on the public internet.

Approach 1: Spin Docker Containers on the fly

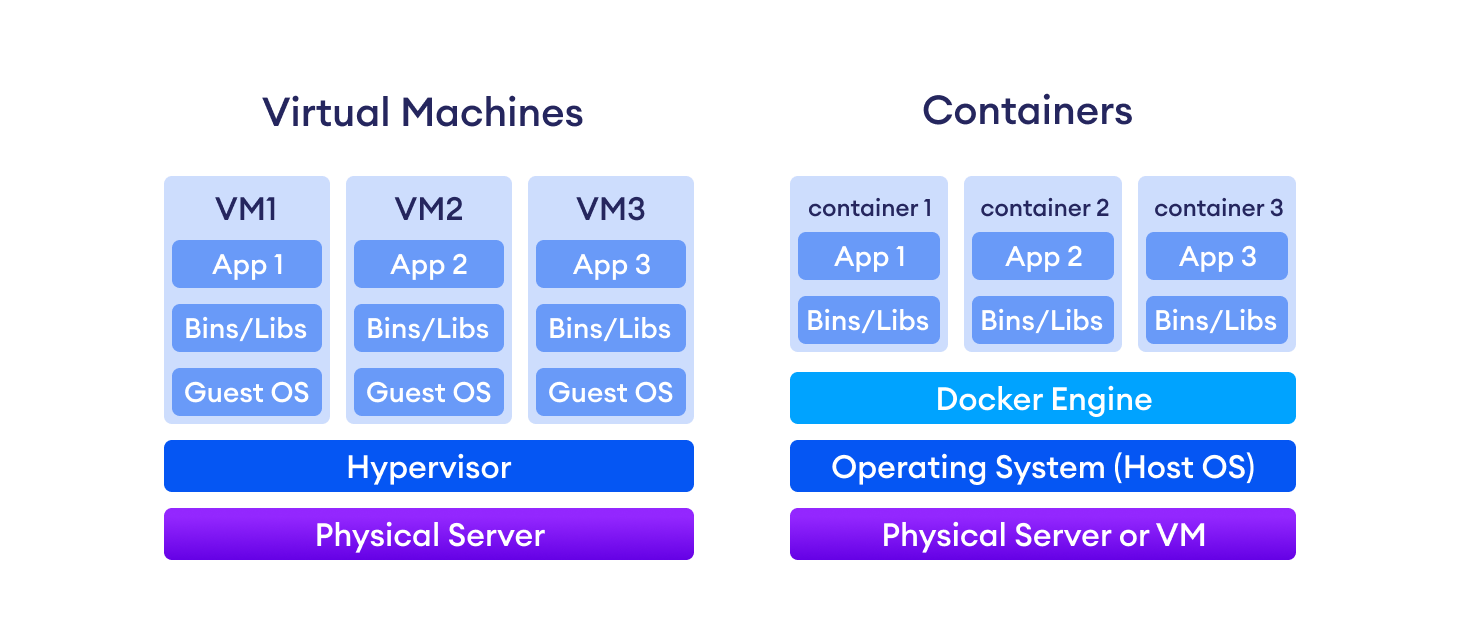

Our first approach was naive: compile and run the user-submitted code in a Docker-based sandbox. A Docker container packages an application together with the full configuration of the environment that the code needs to run in. Containers rely on OS-level virtualization and share the host's kernel, and a Dockerfile gives you a consistent language to describe, layer by layer, how to build your application.

For instance, if you want to host an Nginx server on an ubuntu system and copy over your static file to it, the relevant Dockerfile would look like:

    FROM nginx:alpine
    COPY . /usr/share/nginx/html

The Dockerfile that we wrote starts with an ubuntu image, installs all the programming languages we need to run in the compiler, and then starts a node server on it.

One thing to note about Docker and Dockerfiles is that this was the beginning of wide adoption of Infrastructure-as-code, where you can completely define the environment in which a piece of code needs to be run through code, transfer it to a completely different OS and expect it to just work.

Think of a Docker image as the sheet music of a song for any instrument, say a piano. If you gave the same sheet music to 100 different pianists, each would play the same notes but wouldn't sound exactly the same. A Docker container effectively packages the instrument along with the sheet music so that it always sounds the same.

Virtual Machines could also do that, but as you can see from the diagram above, by having multiple containers share the same host OS, Docker containers solved many of the resource-related issues from the Virtual Machine days.

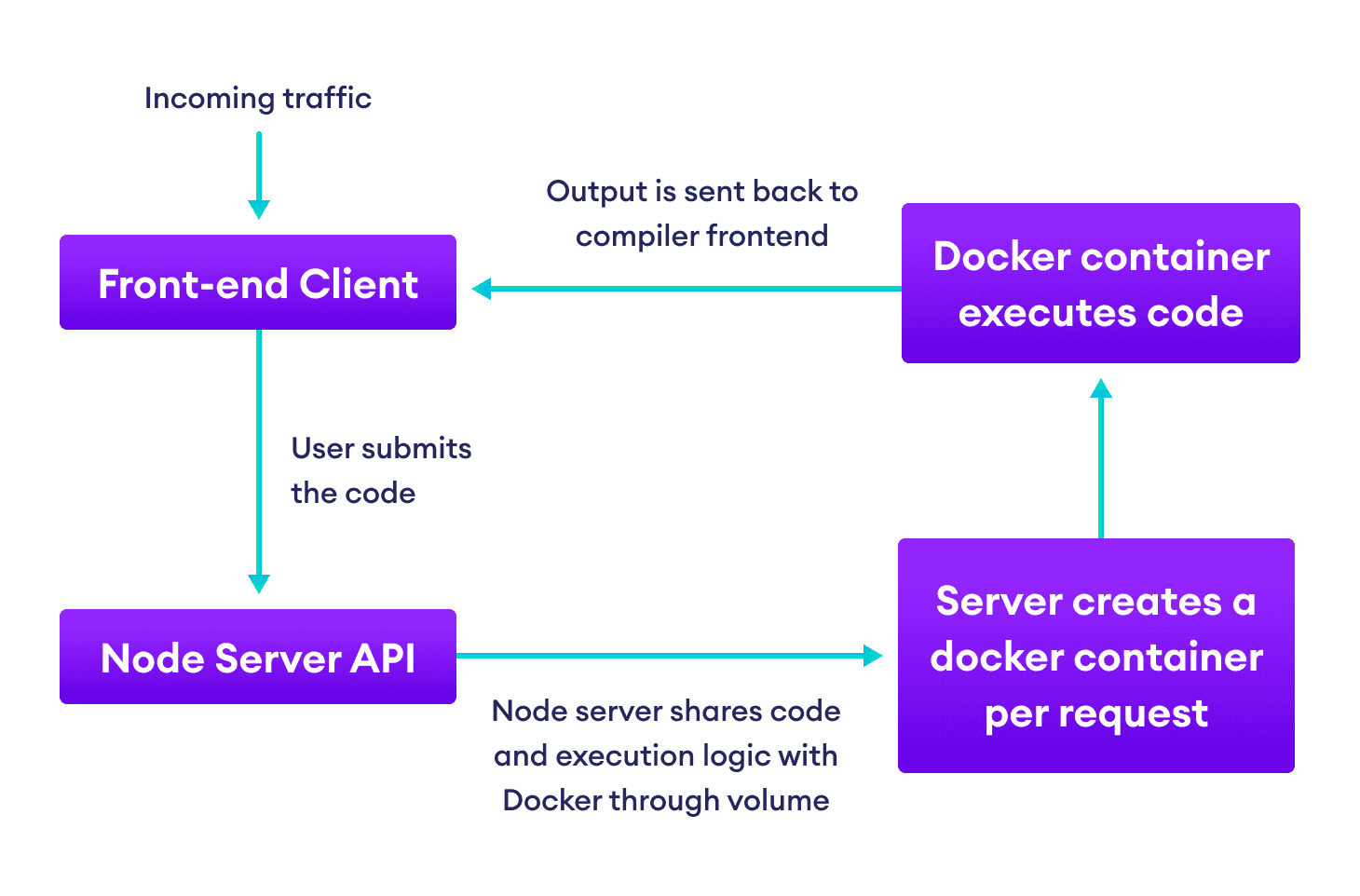

The initial version of the code sandbox we built was similar to the Nginx Dockerfile you saw above. A node.js script would expose an API for running programs. When the API was hit, it would spin up a Docker container with Python installed, run the code inside it and return the result. The communication between the docker container and the node server would happen through mounted volumes, a feature in Docker that allows Docker to share common resources (files in this case) with the host OS and with other Docker containers.
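The flow described above can be sketched in a few lines. This is a minimal illustration in Python rather than the node.js the service was written in, and the image name `python:3-alpine`, the `/sandbox` mount point, and the resource caps are assumptions picked for the example, not the values we ran with.

```python
import subprocess
import tempfile
from pathlib import Path


def build_run_command(host_dir: str, image: str = "python:3-alpine") -> list:
    """Build a `docker run` command that executes main.py from a mounted volume."""
    return [
        "docker", "run",
        "--rm",                           # delete the container once the program exits
        "--network", "none",              # no network access inside the sandbox
        "--memory", "256m",               # hypothetical memory cap
        "--cpus", "0.5",                  # hypothetical CPU cap
        "-v", f"{host_dir}:/sandbox:ro",  # share the code with the container, read-only
        image,
        "python", "/sandbox/main.py",
    ]


def run_user_code(source: str, timeout: float = 10.0) -> str:
    """Write the submitted code to a temp dir, run it in a container, return its output."""
    with tempfile.TemporaryDirectory() as host_dir:
        Path(host_dir, "main.py").write_text(source)
        result = subprocess.run(
            build_run_command(host_dir),
            capture_output=True, text=True, timeout=timeout,
        )
        return result.stdout + result.stderr
```

Calling `run_user_code('print("hello")')` on a machine with Docker installed would start a fresh container for that one program, which is exactly the per-request cost discussed next.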

This solution would have been good enough if we were using it in a Python course that was behind a paywall. But our goal was to put a Python shell out in the open. It would be extremely costly to run so many containers on the fly.

If you're wondering, "Won't that be slow?". Well… not really. Container boot times are extremely fast these days and for most use cases, this would be good enough. We still run an online R compiler on our product datamentor.io that uses this approach and it's never been a problem. But we never had the confidence to put the R compiler we built with this approach for public use without a paywall. That had to change.

Approach 2: Prewarmed docker containers

We wanted our online compiler to be zippy, not just fast. So even the tiny amount of boot time for on-the-fly docker containers was a no-no.

Even if creating a container for our node server from a Docker image took just 1 to 2 seconds, that's a lot of time for a user who wants to run a program and get the results. We decided to keep a cluster of Docker containers running, ready to accept programs.

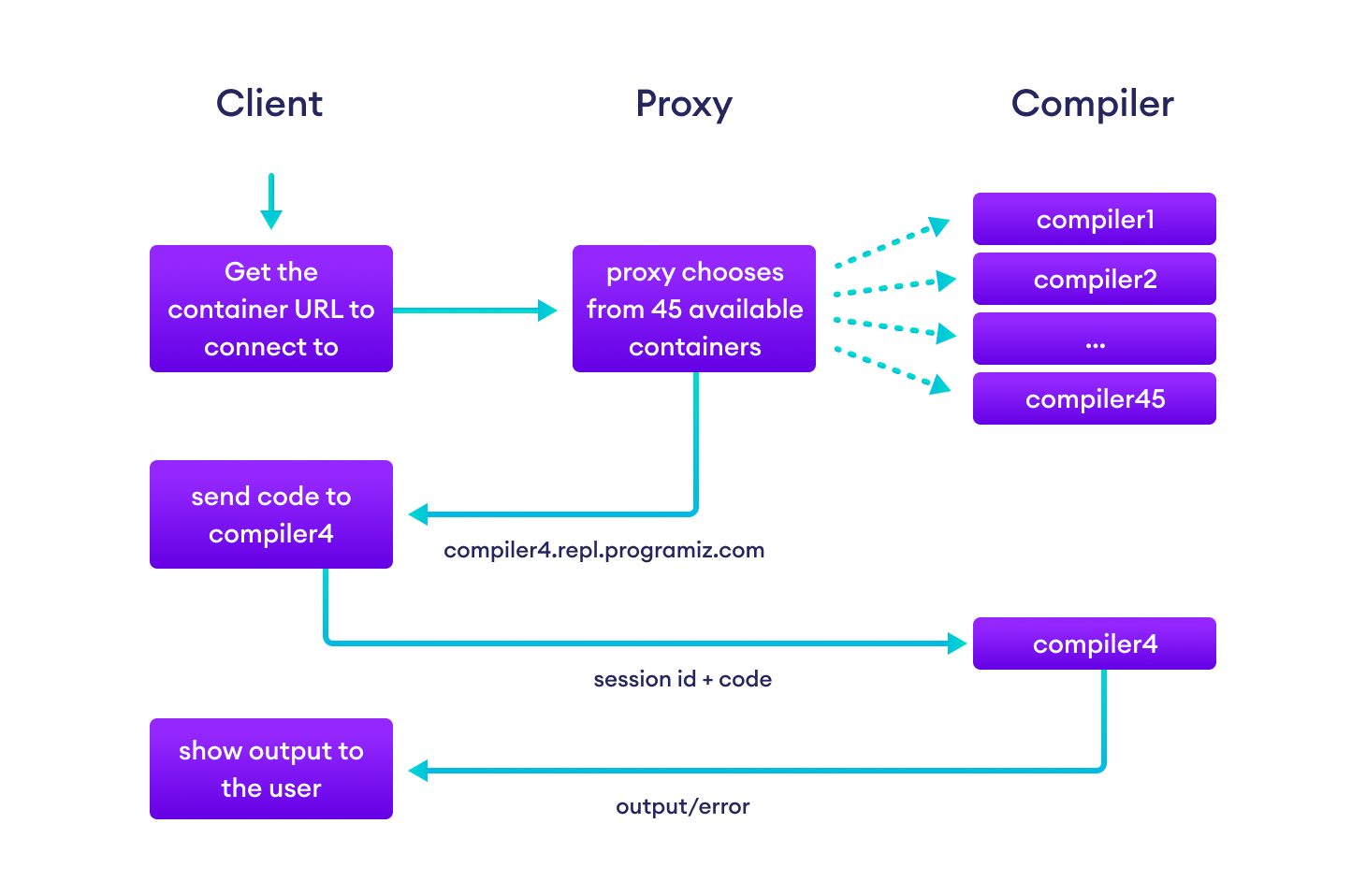

An HTTP request to a microservice would return the URL of a container from a list of 45 containers running a node server that exposed the terminal using node-pty, a package built for doing exactly that.

These containers were running node.js servers at different URLs like compiler1.repl.programiz.com, compiler2.repl.programiz.com, and so on. We could've chosen a number between 1 and 45 randomly on the client-side but we wanted to leave space for future optimizations such as sending the id of the container that had the maximum amount of free resources.
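The selection logic can be sketched as follows. This is an illustration in Python, not the microservice's actual code: the `free_cpu` mapping is a hypothetical metric feed, and only the hostname pattern comes from the setup described above.

```python
import random
from typing import Dict, Optional


def container_url(container_id: int) -> str:
    """Map a container id to its hostname (pattern taken from the post)."""
    return f"compiler{container_id}.repl.programiz.com"


def pick_container(free_cpu: Optional[Dict[int, float]] = None, total: int = 45) -> str:
    """Return the URL of the container a new session should use.

    Without metrics, pick uniformly at random. With metrics (a hypothetical
    mapping of container id -> free CPU share), pick the least loaded one.
    """
    if not free_cpu:
        return container_url(random.randint(1, total))
    best_id = max(free_cpu, key=free_cpu.get)
    return container_url(best_id)
```

Keeping this decision on the server means the client never has to change when the selection strategy does.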

The 45 compilers were behind a proxy server we wrote in node.js that also handled the SSL certificates. We deployed this cluster of 45 containers using Kubernetes (more on Kubernetes later) on the public cloud.

This solution gave us the speed we were looking for. Even on production, it was able to handle our traffic.

However, I wasn't happy with a few things:

- Our workloads vary across the day. The 45 containers would either be under a lot of pressure during peak time or sit around doing nothing during low periods.

- The deployment process was archaic. I was dynamically creating YAML spec files for Kubernetes objects like deployments and services from shell scripts. Kubernetes doesn't expose containers by default, and instead of using a LoadBalancer to spread the workload across the 45 pods (the logical equivalent of a container in Kubernetes), I would run 45 services, one for each pod, and then use our custom proxy server to route traffic to these 45 services.

- The manual proxy that I built made setup on a fresh machine difficult. We didn't want a setup where we would have to modify the /etc/hosts file for local development.

Overall, it felt like we weren't really using the power of Kubernetes and only using it for deploying Docker to production. Just reading the Kubernetes spec files was a pain because they contained many placeholders that had to be replaced by shell scripts before they could be useful.

Approach 3: Unleash Kubernetes

The challenge now was to rethink the infrastructure of the whole setup.

As the technical lead for the project, I took a week off from work and bought a Kubernetes course. I revisited basic DevOps concepts like process management, CPU and memory management, etc.

I also learned about subtle things in Docker like the difference between the ENTRYPOINT and CMD instructions and then took the full Kubernetes Certified Developer course to learn and practice the concepts behind Kubernetes. It felt like running my first for loop again.

While creating Docker images using Dockerfile was akin to Infrastructure-As-Code, Kubernetes takes it to the next level with Infrastructure-As-Data.

Infrastructure-As-Data is a move from imperative programming to declarative programming. You go from telling the computer "what it needs to do", step by step, to describing "what needs to be done".

You do that by writing a YAML file that describes what you want to get done in a syntax that is defined by the Kubernetes API. YAML is like the US dollar: it's a data currency that every programming language accepts.

You can then provide this YAML file as an offering to the Kubernetes gods (Azure, Google Cloud Platform, AWS, DigitalOcean - you can take your pick) and they will pull your container from Docker Hub and run your app on production. Docker Hub is a repository for Docker images that works just like npm or ruby gems or pip.

Even Docker provides an Infrastructure-As-Data layer on top of Dockerfiles in the form of Docker Compose. GitHub Actions also works on the same philosophy: you just describe what needs to be done in a YAML file based on a specification, and GitHub handles the rest.

Going into the details of how Kubernetes works is beyond the scope of this article. So I'll move on to how we used some of the features of Kubernetes to make sure our python compiler handles dynamic traffic and doesn't go down.

Resource Limits on Kubernetes Pods

Just like a Docker container was akin to Musical Instrument + Sheet Music, a Kubernetes pod is a Docker Container + its Kubernetes Specification.

A pod definition file contains information like

- What resources should I be able to consume?

- Where should I pull the Docker image from?

- How do I identify myself to other Kubernetes elements?

- What should I run on the Docker container when it starts?

A Kubernetes deployment is another abstraction layer above pods that also describes how a pod should be deployed in the Kubernetes cluster.

A deployment adds more information on top of a pod specification such as

- How many replicas or copies of this pod should the Kubernetes cluster run?

- If the Docker image is in a private repository, where should I get the secret information I need to pull from that private repository?

It's just layers and layers of abstraction that make life easier for developers and system administrators alike.

    resources:
      requests:
        memory: "0.5G"
        cpu: "0.5"
      limits:
        memory: "1.5G"
        cpu: "1"

The lines above tell Kubernetes to reserve 500MB of RAM and 0.5 cores of CPU for the pod and allow it to expand up to 1.5GB and 1 core respectively. If a pod consistently crosses its limit, then it is evicted from the cluster and a new pod takes its place.

Kubernetes doesn't automatically remove Evicted pods from its cluster. I understand the reasoning behind this: a sysadmin might want to look at why the pod got evicted. Since ours is a simple application where all users are performing the same operation, I didn't want to bother with Evicted pods, so I wrote a GitHub action that runs a cleanup script to remove them on a schedule.

    name: Remove evicted pods every hour
    on:
      schedule:
        - cron: "0 */1 * * *"
    jobs:
      delete-evicted-and-crashed-pods-from-kube-cluster:
        runs-on: ubuntu-latest
        steps:
          - name: Setup Digital Ocean Client
            uses: matootie/[email protected]
            with:
              personalAccessToken: ${{ secrets.DIGITALOCEAN_PERSONAL_ACCESS_TOKEN }}
              clusterName: programiz-compiler
              expirationTime: 300
          - name: Remove evicted pods
            run: |
              kubectl get pods | grep Evicted | awk '{print $1}' | xargs --no-run-if-empty kubectl delete pod

This example shows the power of being able to use Infrastructure-As-Data across the board. :)

Readiness and liveness probes

In our previous setup of 45 pods running behind a proxy, we had no way to stop traffic from going to an unresponsive pod.

Enter readiness and liveness probes, which are parts of a Kubernetes pod specification designed to allow the developer to define the requirements for sending traffic to a pod.

    readinessProbe:
      tcpSocket:
        port: 3000
      initialDelaySeconds: 10
      periodSeconds: 3
    livenessProbe:
      tcpSocket:
        port: 3000
      periodSeconds: 3

There are two parts to it:

- The readiness probe waits 10 seconds after a pod is created and attempts a TCP connection handshake at port 3000. If that handshake doesn't succeed, it keeps on trying every 3 seconds. A pod will not receive any traffic until that handshake is successful.

- The liveness probe attempts a TCP socket handshake with our node server running at port 3000 (internally) every 3 seconds, and restarts the container if it stops responding.

You could replace tcpSocket with httpGet and have a REST API endpoint that acts as a health check for your pod.

In about 10 lines, Kubernetes does all the heavy lifting to make sure that our users get the best experience. Even if someone runs some malicious code or an infinite loop in our compiler, the readiness probe quickly stops traffic to that pod until it becomes responsive again, and the liveness probe restarts it if it doesn't recover.
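What a tcpSocket probe checks can be approximated in a few lines of Python. This is only a sketch of the idea: the real check is performed by Kubernetes itself, and the function name and the 1-second timeout are assumptions for illustration.

```python
import socket


def tcp_probe(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable: the probe fails.
        return False
```

A probe like `tcp_probe("127.0.0.1", 3000)` only proves that something is accepting connections on the port. An httpGet probe against a health-check endpoint can verify more, such as whether the server can still respond to a request.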

Deny Egress Traffic

One of the problems of letting people execute code remotely on your server is that some of them will try to run malicious scripts, mine bitcoin, and whatnot. Our goal was to provide a fast compiler for beginners looking to run simple Python scripts online while they are grappling with beginner concepts like for loops and object-oriented programming.

So I took a shortcut and put in a network policy that denies egress traffic from the node server.

    metadata:
      name: programiz-compiler-pod
      labels:
        app: programiz-compiler
        type: server
        networkpolicy: deny-egress

networkpolicy: deny-egress is just a label here. There is another Kubernetes spec file that defines the Network policy and applies it to all pods that have the label networkpolicy: deny-egress.

This network policy file works independently from the pod. I can apply this label to any pods in the future and expect the network policy to kick in automatically.
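The way a policy finds its pods is plain label matching: a selector applies to a pod when every key/value pair in the selector is present in the pod's labels. A toy model of that rule in Python (the function name is made up for illustration; Kubernetes does this matching internally):

```python
from typing import Dict


def selects(selector: Dict[str, str], pod_labels: Dict[str, str]) -> bool:
    """True when every key/value pair in the selector appears in the pod's labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


# Labels from the pod metadata shown above.
compiler_pod = {
    "app": "programiz-compiler",
    "type": "server",
    "networkpolicy": "deny-egress",
}

print(selects({"networkpolicy": "deny-egress"}, compiler_pod))        # True
print(selects({"networkpolicy": "deny-egress"}, {"app": "frontend"}))  # False
```

Because the match depends only on labels, the policy needs no knowledge of pod names or of which deployment created them.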

Autoscaling Based on Traffic Volume

With Kubernetes deployments, we can choose how many replicas of the pods should be run. Another Kubernetes feature called services can then be used to distribute traffic across these pods based on different routing algorithms that can also be defined according to the Kubernetes specification.

In my previous setup, I was actually creating 45 separate deployments, not one deployment with 45 replicas. And I was stuck with that number during both low traffic and peak traffic times.

Kubernetes provides a feature called HorizontalPodAutoscaler that you can use to define the minimum and the maximum number of replicas of your pod that should be created.

    apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      name: programiz-compiler-autoscaler
    spec:
      minReplicas: 25
      maxReplicas: 50
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: programiz-compiler-deployment
      targetCPUUtilizationPercentage: 80

It simply tells the Kubernetes cluster to maintain between 25 and 50 replicas of our compiler server, adding or removing replicas to keep the average CPU utilization around 80%.

For instance, if the average CPU utilization across our pods goes above 80%, then Kubernetes will keep adding pods and directing traffic to them until the utilization drops back to the target. Later, when the traffic goes down and the average utilization falls well below 80%, Kubernetes starts removing pods automatically.
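The scaling rule documented for the HorizontalPodAutoscaler boils down to desired = ceil(current replicas × current utilization / target utilization), clamped to the configured bounds and skipped when the ratio is within a small tolerance of 1. The Python sketch below is a simplified model of that rule using our 25/50/80 settings; the real controller also handles missing metrics and stabilization windows, which are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_cpu: float,
                     target_cpu: float = 80, min_replicas: int = 25,
                     max_replicas: int = 50, tolerance: float = 0.1) -> int:
    """Simplified model of the HPA scaling rule for a CPU utilization target."""
    ratio = current_cpu / target_cpu
    # Close enough to the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Never go outside the minReplicas/maxReplicas bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(25, 100))  # 32: overloaded, scale up
print(desired_replicas(40, 40))   # 25: idle, scale down to the minimum
print(desired_replicas(30, 84))   # 30: within tolerance, no change
```

Utilization here is measured relative to each pod's CPU request, so 80 means the pods are on average using 80% of the 0.5 cores they asked for.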

The story doesn't end here though. Pods are deployed on Kubernetes worker nodes that have limited specs. For instance, since we require 0.5 cores of CPU and 500MB of memory for each replica, we can only fit 4 of those on a 2 core 8 gig node.

If there are not enough nodes available for the pods that the HorizontalPodAutoscaler creates, then they will just stay in Pending state and not be able to accept traffic.

This is where node autoscaling comes into play.

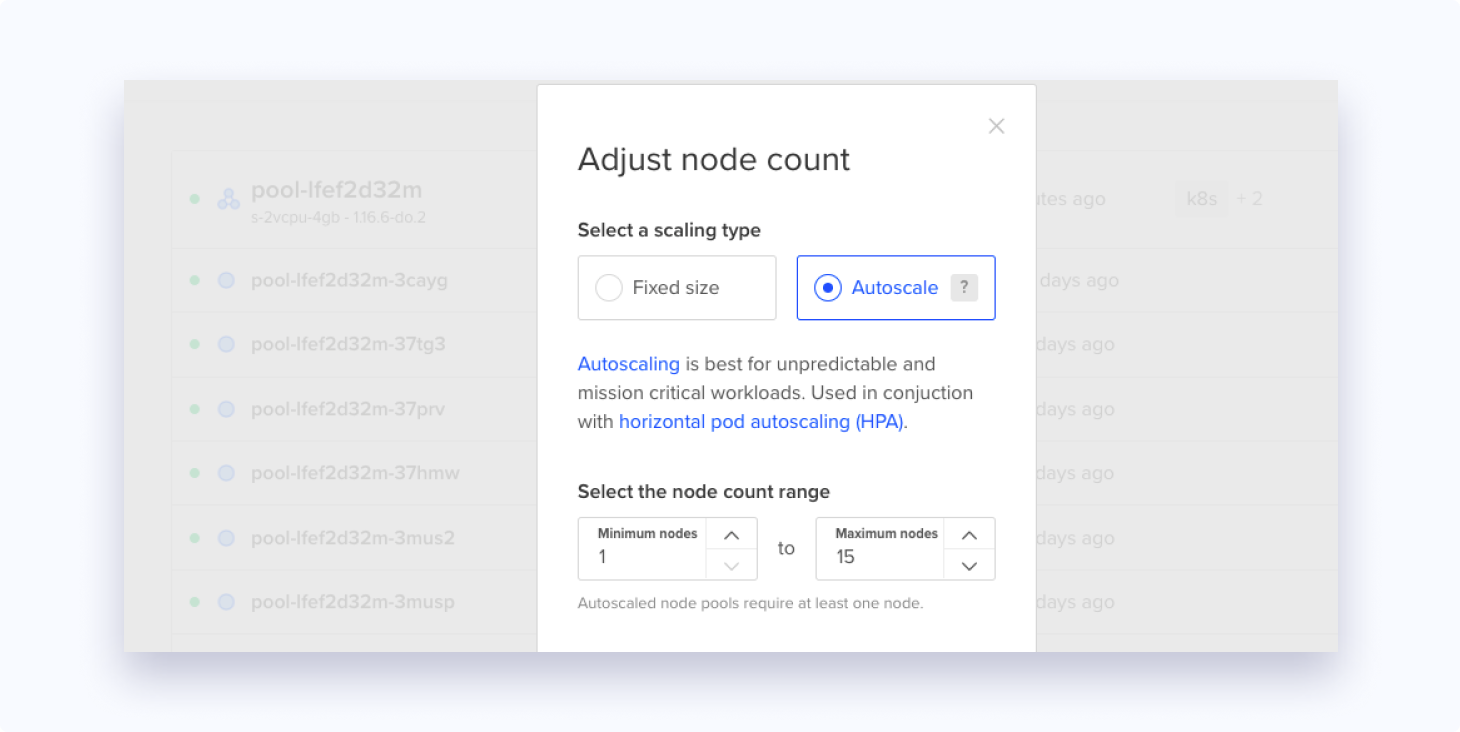

Our Kubernetes cluster is set to autoscale from 1 worker node to 15 based on how many pod replicas our HorizontalPodAutoscaler (HPA) has told our master node to spin up.

If our tool becomes very popular and even the 50 replicas aren't enough, then technically we could increase the maxReplicas number to 60 (4x15). If even that's not enough, we can always change the maximum nodes that the cluster can scale to.
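The capacity arithmetic behind these numbers is easy to check. The Python sketch below uses the figures from this post (0.5 cores and 0.5 GB requested per pod, 2-core 8 GB nodes, at most 15 nodes) and, as a simplifying assumption, ignores the CPU and memory that each node reserves for system components.

```python
import math


def pods_per_node(node_cpu: float, node_mem_gb: float,
                  pod_cpu: float, pod_mem_gb: float) -> int:
    """How many pods fit on one node, limited by the scarcer resource."""
    return int(min(node_cpu // pod_cpu, node_mem_gb // pod_mem_gb))


def nodes_needed(replicas: int, per_node: int) -> int:
    """Minimum number of nodes required to schedule the given replica count."""
    return math.ceil(replicas / per_node)


per_node = pods_per_node(node_cpu=2, node_mem_gb=8, pod_cpu=0.5, pod_mem_gb=0.5)
print(per_node)                    # 4: CPU is the bottleneck, not memory
print(per_node * 15)               # 60: ceiling with 15 nodes
print(nodes_needed(25, per_node))  # 7: nodes at minReplicas
print(nodes_needed(50, per_node))  # 13: nodes at maxReplicas
```

CPU runs out long before memory does on these nodes, so a node type with more cores per GB would pack more replicas for the same memory.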

Just like HPAs, the node autoscaler also gets rid of nodes that aren't being used automatically if the HPA scales down the number of replicas. This downscaling saves us a lot of money during low traffic times when the entire cluster just runs on 25 replicas.

LoadBalancing & Caching

LoadBalancing is another area where Kubernetes and its concepts of declarative programming and Infrastructure-as-data shine. Kubernetes provides a resource called Ingress that creates a single entry point for all your services, just like an Nginx reverse proxy. Nginx isn't the only proxy supported; you can use others like HAProxy and Traefik too.

In our case, we just created an Nginx load balancer that routes to either our backend or frontend services based on the URL. Certificate management is also done at this layer using ClusterIssuer, a resource provided by the cert-manager add-on for Kubernetes, which takes care of SSL certificate management under the hood.

Adding more features on top of the Nginx reverse proxy doesn't require manually editing the nginx.conf file. There is a field called annotations where you can add an annotation for the feature you want and it will just work.

For instance, since most of our users are repeat users, we wanted to cache CSS, JS, fonts, and other static assets. Doing this was a piece of cake: I just had to add a simple annotation to our ingress YAML file.

    annotations:
      nginx.ingress.kubernetes.io/configuration-snippet: |
        if ($request_uri ~* \.(css|js|gif|jpe?g|png|svg|woff2)) {
          add_header Cache-Control "public, max-age=31536000";
        }

If there is a critical front-end update that I want to force our users to download, I can just change the reference to build.js in our index.php to build.js?v=34tssdaf or any random string to invalidate the cache.
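A random string works, but the version can also be derived from the file itself so that the URL changes exactly when the content does. The Python sketch below shows that variation; it is not what our build does, and the function name and the 8-character digest length are arbitrary choices for the example.

```python
import hashlib


def versioned_url(path: str, content: bytes) -> str:
    """Append a short content hash so browsers refetch only when the file changes."""
    digest = hashlib.sha256(content).hexdigest()[:8]
    return f"{path}?v={digest}"


old = versioned_url("build.js", b"console.log('v1');")
new = versioned_url("build.js", b"console.log('v2');")
print(old != new)  # True: changed content produces a new URL, bypassing the cache
```

With a one-year max-age on static assets, some form of versioned URL is the only way to get an update in front of returning users before their cached copy expires.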

Prevent Attacks On The Whole Cluster

When we looked at the logs, we noticed that a lot of people were running infinite loops and programs that would access the file system to see what happens. I don't blame them, anybody would be tempted.

But some users were going out of their way to stop the compiler from working, running selenium scripts that would repeatedly open our compiler to start a session and run infinite loop programs or other malicious code that would render pods unresponsive.

Eventually, these pods would be evicted and new pods created but again new sessions would be redirected to these new pods and an attacker could potentially make all our pods unresponsive at once.

Fortunately, there are annotations in the Nginx Ingress YAML specification that tell the Ingress controller to set a cookie identifying which pod a user was directed to when the session started. In subsequent sessions, the controller will detect the cookie and use it to send the user back to the same pod. This is called session affinity in Kubernetes speak.

    annotations:
      nginx.ingress.kubernetes.io/affinity: "cookie"
      nginx.ingress.kubernetes.io/session-cookie-name: "route"
      nginx.ingress.kubernetes.io/session-cookie-expires: "172800"

Session affinity can also be based on other attributes such as the client IP, which sends sessions from the same IP to the same container using hashing techniques.

With just three lines of configuration, I was able to implement sticky sessions. Getting this to work with a hand-configured Nginx reverse proxy and the imperative programming route would have taken days.
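The hashing idea behind IP-based affinity is simple: hash the client address and use the result to pick a pod, so the same address always lands on the same one. The Python sketch below illustrates the principle only. The pod names and IP address are made up, and a plain modulo like this reshuffles most users whenever the pod count changes, which is why proxies often use consistent hashing instead.

```python
import hashlib
from typing import List


def pod_for_ip(ip: str, pods: List[str]) -> str:
    """Deterministically map a client IP to one pod from the list."""
    digest = hashlib.sha256(ip.encode()).digest()
    index = int.from_bytes(digest[:8], "big") % len(pods)
    return pods[index]


pods = [f"compiler-pod-{i}" for i in range(25)]  # hypothetical pod names
first = pod_for_ip("203.0.113.7", pods)
second = pod_for_ip("203.0.113.7", pods)
print(first == second)  # True: the same IP is always routed to the same pod
```

Either way, the effect on an attacker is the same as with cookies: repeated sessions from one source keep hitting one pod instead of spreading across the cluster.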

Automated Deployments and Development Workflow

For me, a project is never complete unless it has an automated deployment process. Automated deployment lowers the barrier for other members of your team to contribute.

The GitHub Actions script that deploys the Kubernetes cluster is not complex at all, so I'll just leave it here for anyone wishing to know how a Kubernetes cluster is deployed.

We check out the latest code, build a Docker image based on Dockerfile that is checked in with the code, push that so it becomes the latest image on our Docker Hub private repo, and then ask Kubernetes to pull it from Docker Hub. Another win for Infrastructure-as-code!

    name: CD-prod
    on:
      push:
        branches: [ master ]
    jobs:
      build-and-deploy:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v2
          # Required to pull from private docker registry
          - name: Docker Login
            uses: Azure/docker-login@v1
            with:
              username: ${{ secrets.DOCKERHUB_USERNAME }}
              password: ${{ secrets.DOCKERHUB_PASSWORD }}
          # To enable kubectl and deploy to cluster
          - name: Setup DigitalOcean Client
            uses: matootie/[email protected]
            with:
              personalAccessToken: ${{ secrets.DIGITALOCEAN_PERSONAL_ACCESS_TOKEN }}
              clusterName: programiz-compiler-cluster
              expirationTime: 300
          - name: Build Prod Image
            run: |
              docker build -t parewahub/programiz-compiler-server:"$GITHUB_SHA" -t parewahub/programiz-compiler-server -f Dockerfile .
              # --all-tags pushes both the $GITHUB_SHA and latest tags
              # (without it, Docker 20.10 and later push only latest)
              docker push --all-tags parewahub/programiz-compiler-server
          # Force rollout of deployment
          - name: Force repulling of prod image on Kubernetes
            run: kubectl rollout restart deployment programiz-compiler-deployment

What's next?

Infrastructure-as-data allowed me to introduce complex features into our online compiler app without doing a lot of coding. Features of Kubernetes such as session affinity, liveness and readiness probes, Nginx Ingress, ClusterIssuer, Deployments, and many more helped make our application robust.

As of this writing, there have been zero crashes on any of our pods for the last 48 hours. The dream of providing access to the world of programming for anyone with a smartphone is coming true.

Peter Thiel says you have to make your product 10X better than your biggest competitor. We ruthlessly pushed for the highest level of performance and ease of use and our online compiler has already risen to #1 on Google SERP for the keyword "Python Online Compiler".

We are now working to implement the compiler throughout our website for all the programming languages we support. Since I've built the foundations right, I hope our users will be able to play with all the code on Programiz very soon.
